Close collaboration with NVIDIA and JEDEC standardization

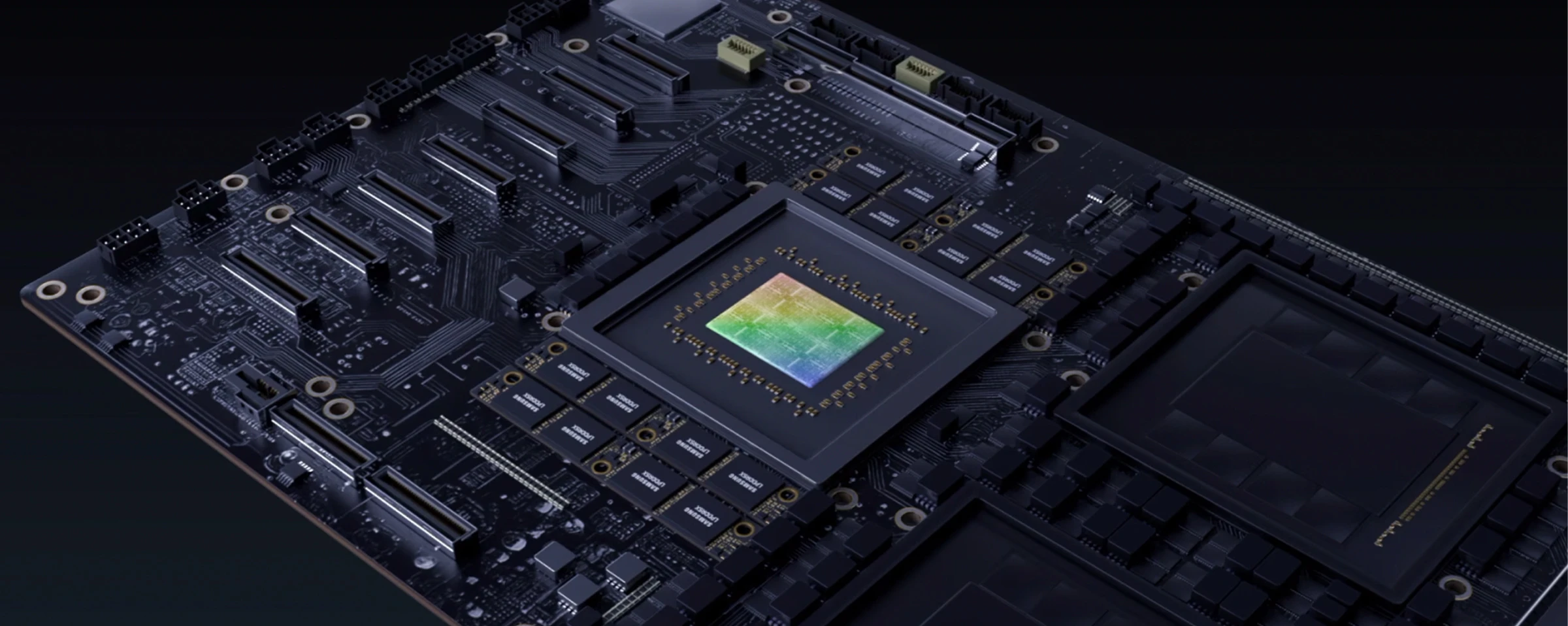

Samsung is expanding its collaboration across the AI ecosystem to accelerate adoption of LPDDR-based server solutions. In particular, the company is working closely with NVIDIA through ongoing technical cooperation to optimize SOCAMM2 for NVIDIA accelerated infrastructure, ensuring it delivers the responsiveness and efficiency required for next-generation inference platforms. NVIDIA underscored this partnership in its own remarks:

“As AI workloads shift from training to rapid inference for complex reasoning and physical AI applications, next-generation data centers demand memory solutions that deliver both high performance and exceptional power efficiency,” said Dion Harris, senior director, HPC and AI Infrastructure Solutions, NVIDIA. “Our ongoing technical cooperation with Samsung is focused on optimizing memory solutions like SOCAMM2 to deliver the high responsiveness and efficiency essential for AI infrastructure.”

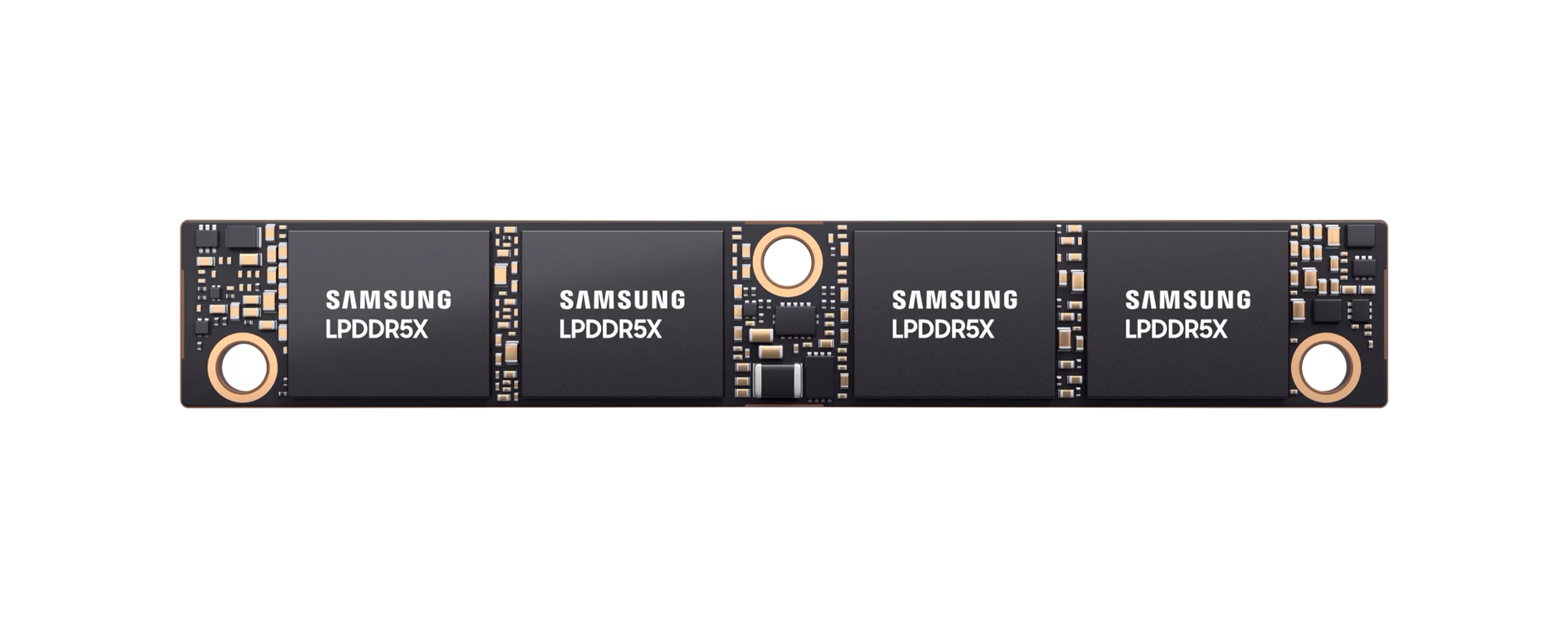

As SOCAMM2 gains traction as a low-power, high-bandwidth solution for next-generation AI systems, the industry has begun formal JEDEC standardization of LPDDR-based server modules. Samsung is contributing to this work alongside key partners, helping to shape consistent design guidelines and enable smoother integration across future AI platforms.

Through continued alignment with the broader AI ecosystem, Samsung is helping to guide the shift toward low-power, high-bandwidth memory for next-generation AI infrastructure. SOCAMM2 represents a major milestone for the industry, bringing LPDDR technology into mainstream server environments and supporting the transition to the emerging superchip era. By combining LPDDR with a modular architecture, it offers a practical path toward more compact and power-efficient AI systems.

As AI workloads continue to grow in scale and complexity, Samsung will further advance its LPDDR-based server memory portfolio, reinforcing its commitment to enabling the next generation of AI data centers.